Deep dive into deepfakes: Mapping the development of risk and deplatforming narratives of deepfake technology on news and social media

Introduction

The term ‘deepfake’ refers to videos created with deep learning technology by manipulating two or more source videos or photos of different persons (Maras and Alexandrou). The technology mimics the movements and expressions of one person and maps them onto another, making it seem as though the other person is acting or giving a speech. The practice is also referred to as “face-swapping”, which was initially a more basic process of using programs like Photoshop to place the image of one person’s face on another person’s body. Deepfaking was first used extensively in pornography in 2017, where the faces of porn stars were swapped with those of famous female celebrities (Hasan and Salah). The term deepfake itself was named after a Reddit user who, near the end of 2017, developed the machine learning algorithm that maps celebrity faces onto porn stars (Goggin). This software was significant because it allowed almost anyone to quickly and easily produce high-quality, convincing altered videos. Multiple variations of the software, including the desktop application FakeApp, which used Google’s TensorFlow framework, and the Chinese app ZAO, were released in 2018 and 2019 respectively (Goggin). The software caused the r/deepfakes subreddit to grow explosively as users created and shared their own videos, and its use expanded from pornography to politics. This marked the beginning of the widespread use of hyper-realistic deepfakes and of their role as an impactful instrument of digital disinformation that any user could employ.

The rising popularity of deepfakes ultimately led platforms such as Twitter, Gfycat, and Pornhub to ban their use and the associated communities. The r/deepfakes subreddit was banned in February 2018, when Reddit updated its policy to prohibit pornographic deepfakes (Goggin; Matsakis). In 2019, the Cyberspace Administration of China enforced a new law that makes publishing deepfakes a criminal offense, on the grounds that the virality of deepfake mobile applications threatens the country’s social and political stability (Statt). To combat deepfakes, platforms are actively seeking new ways to detect, identify, and moderate deepfake content, for example through artificial intelligence or blockchain technology (Martinez). The traditional approach of banning and moderating deepfakes will be difficult for content moderators, because the rapid development of deep learning technology makes deepfakes ever harder to detect with the naked eye (Benjamin); some experts argue the technology may be ‘perfected’ by the end of the year (Stankiewicz). As deepfakes become imperceptible, they can blur the line between what is real and what is fake and complicate the notion of trust, since everything could potentially be assumed to be fake. Deepfakes can thus strengthen the landscape of fake news, and platforms need to take preliminary action to counter them, especially in light of the global and national political events that deepfakes may disrupt (Metz).

This research looks closer into the development of deepfake technology as a risk narrative, and the extent to which deplatforming is being used as a solution to the development of this risk. This is done through the research question: “What are the risk narratives surrounding the deplatforming of deepfakes?” Deplatforming refers to the act of removing users, groups, or entire narratives from online platforms as a means of silencing them and their related discourses. As the narrative of deepfakes being framed as a risk spread rather quickly, platforms were relatively eager to take action in vowing to ban deepfake content on their platform.

There are, however, already some apparent discrepancies between platform owners vowing to remove deepfake content and the actions they take to achieve this. As pointed out by The Next Web’s Bryan Clark, while Pornhub, for example, vowed to ban deepfake videos from the platform, the action it took was to remove all search results for the [deepfake] query, while none of the actual deepfake videos have been removed, even to this day (The Next Web, 2018). Similarly, while Reddit has at this point banned every deepfake subreddit related to pornography, other safe-for-work deepfake subreddits remain active to this day, which says something about the extent to which Reddit’s moderators and administrators perceive deepfakes as a risk. A possible explanation for this discrepancy can be found in Ulrich Beck’s book ‘World at Risk’. He writes: “The less calculable risk becomes [...] the more weight culturally shifting perceptions of risk acquire, with the result that the distinction between risk and the cultural perception of risk becomes blurred.” (Beck, 2008). Since deepfake pornography seems to be the cause of the technology’s notoriety, the focus on this single aspect of the technology’s capabilities has perhaps shifted and then blurred the cultural perception of the risk of deepfakes, so that it centres on this single phenomenon.

Doing this risk cartography research requires a theoretical framework that lends itself to it. Beck and Kropp’s 2011 paper identifies “four main types of entities in a controversy: protagonists (who is involved?), matters of concern (what is at stake?), statements (what are the knowledge claims, and what are we afraid of?) and things (what can be done?).” (Beck and Kropp, 2011). Within the context of this research, we identify platform owners, platform users and news media as the protagonists; these groups are our main object of study. There seems to be, to some extent, consensus regarding the matters of concern surrounding deepfakes, namely their status as a multifaceted privacy risk, as well as regarding the things that can be done about deepfakes, namely deplatforming. We therefore focus on the statements made by the various protagonists and how these develop over time, since these statements are the most defining factor for deepfakes’ status as a risk narrative.

Methodology

To answer the research question and trace how the discourse around deplatforming deepfakes has developed over the past five years, we designed a methodology comprising data extraction tools, namely the Lippmannian Device and the Twitter Capture & Analysis Tool (both by the Digital Methods Initiative), the text analysis software AntConc, and the visualization tool DataWrapper.

To determine the scope of the analysis, that is, how many years of discourse around deepfakes we should consider, a simple Google search was done using the keywords [deepfake] and [deepfakes]. A brief glance through the first few pages of results showed that most were either news articles or YouTube videos, mainly published in 2017, 2018, and 2019. The oldest results dated back to 2014 and 2015 and were tied to the keyword [face swap], a simpler and broader term used to refer to deepfakes before the word became mainstream in 2017. This suggests that the discourse around deepfakes started within the past five years; hence, the timeframe of 2015 to 2019 was chosen for the first part of the study.

The first task was to examine whether ‘deepfakes’ as a concept in general, and ‘deplatforming deepfakes’ in particular, were globally rising risks or phenomena. To do this, the Lippmannian Device was used. The Lippmannian Device is a data extraction tool built by the internet research group Digital Methods Initiative (DMI). The tool queries search engines for keywords within a given set of websites, and it can restrict these queries to specific time frames. Using the tool, two keywords, [deepfake(s)] and [face swap(s)], were queried on ten different technology news websites for the years 2015 to 2019. Since deepfakes are a very “technological” phenomenon, tech news sites were expected to cover the matter more than general news websites, which is why they were chosen. To curate the list of ten tech news sites, a simple Google search for the keyword [Deepfakes] was done and the first ten unique tech news sites to appear were selected for the study. The sites are listed below in figure 1.

Figure 1: List of the ten tech news websites

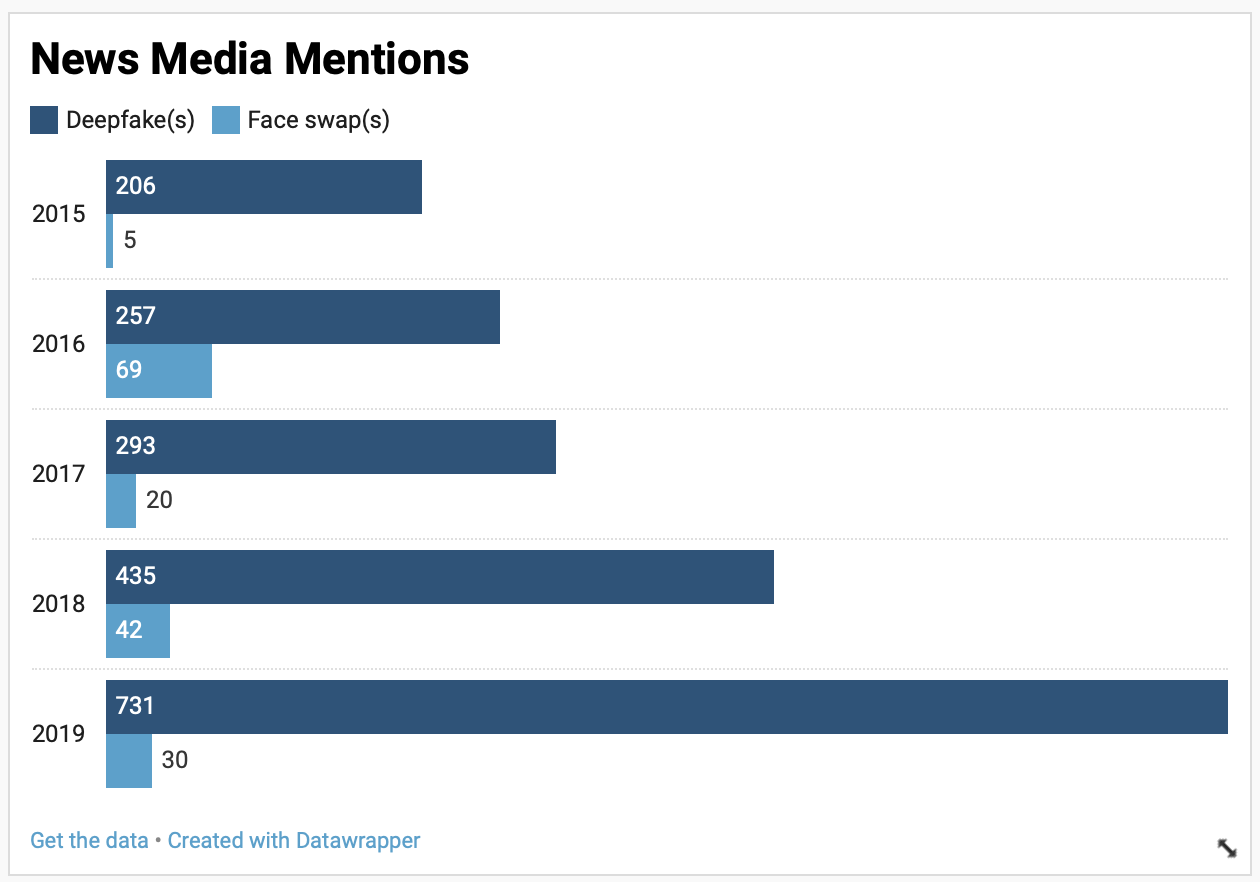

After this, the websites were entered in the URLs box of the Lippmannian Device and the two queries [deepfake OR deepfakes] and [face swap OR face swaps] were each run five times, once per year. The results indicated that the term ‘deepfakes’ was barely used in 2015 (returning a low number of keyword mentions by the tech news sites) and gained great prominence as time progressed, which shows that the discourse around deepfakes has risen starkly in the past five years. In addition, a closer qualitative reading of five randomly selected news articles per year showed that the discourse has mainly concerned the risks posed by deepfakes, i.e., whether or not they are good for society overall across a variety of use cases.

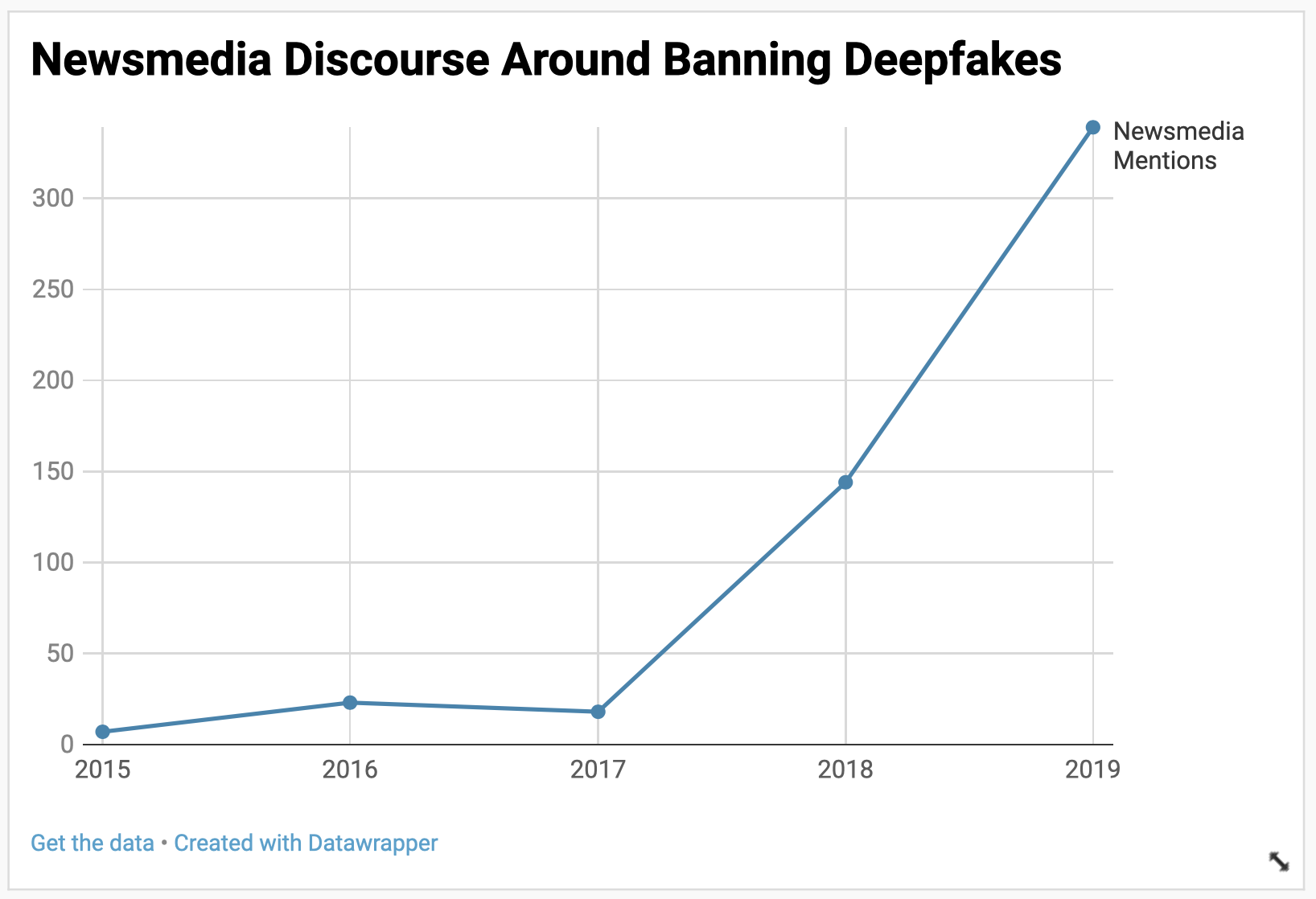

The second task was to examine whether the rising risks of deepfakes are also leading to a discourse in which the tech news websites talk about ‘deplatforming’ or ‘banning’ deepfaked content on the internet. To achieve this, co-keyword data extraction was done on the ten tech news sites listed above for the years 2015 to 2019. Queries like [deepfakes AND banned] and [deepfakes AND ban] were used to find out how often the keywords [deepfakes] and [banned] are mentioned together. The results showed that banning (or deplatforming) deepfakes was a largely unfamiliar concept in 2015 but a widely discussed one in 2018 and 2019, which indicates that deplatforming deepfakes was increasingly being talked about by the chosen tech news sites. The findings of both tasks were visualized for further inspection using DataWrapper, a site that produces various types of basic visualizations, such as bar graphs and bubble charts, from data uploaded in CSV format.
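The co-keyword logic described above can be illustrated offline. The following is a minimal sketch, not a reproduction of how the Lippmannian Device works (the tool queries search engines rather than local text). It assumes a hypothetical list of `(year, text)` article records; the function names `mentions`, `co_keyword_counts`, and `to_csv` and the sample data are illustrative only. It counts, per year, the articles that mention both term groups and serialises the counts as CSV of the kind a charting tool could ingest.

```python
import csv
import io
import re
from collections import Counter


def mentions(text, terms):
    """Return True if any of the terms occurs as a whole word in text."""
    pattern = r"\b(?:" + "|".join(re.escape(t) for t in terms) + r")\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def co_keyword_counts(articles, first_terms, second_terms):
    """Count, per year, the articles that mention both term groups."""
    counts = Counter()
    for year, text in articles:
        if mentions(text, first_terms) and mentions(text, second_terms):
            counts[year] += 1
    return counts


def to_csv(counts):
    """Serialise the yearly counts as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["year", "articles"])
    for year in sorted(counts):
        writer.writerow([year, counts[year]])
    return buffer.getvalue()


# Hypothetical sample records, invented for illustration only.
SAMPLE_ARTICLES = [
    (2018, "Reddit has banned the deepfakes community."),
    (2018, "A new deepfake tool was released."),
    (2019, "China moves to ban deepfakes."),
    (2019, "Face swaps go viral."),
]

if __name__ == "__main__":
    counts = co_keyword_counts(
        SAMPLE_ARTICLES, ["deepfake", "deepfakes"], ["ban", "banned"]
    )
    print(to_csv(counts))
```

Matching on whole words keeps [ban] from also matching words such as "urban"; whether the original search engine queries behaved this way is not known.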

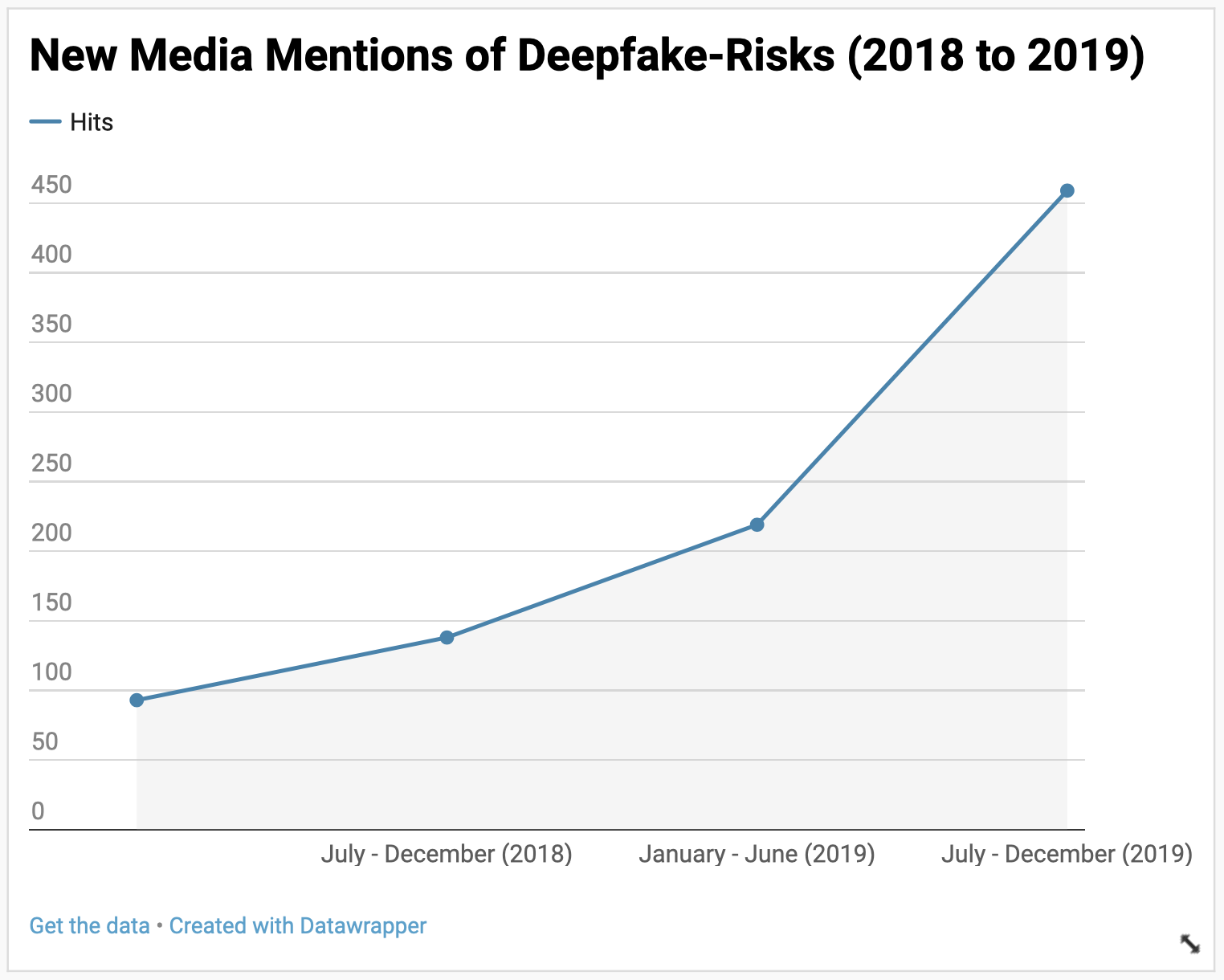

To build on this, we conducted an additional query with the Lippmannian device in order to conceptualise the extent to which risk narratives are acquiring media presence since the term deepfake had become widely used in 2017. For this, a snowballing technique was used for an associative search engine query [deepfake AND risk] to expand the list of news sources beyond the scope of tech-related news. This list of 73 sources was then used in the Lippmannian device with the same query for four timeframes in 2018 and 2019 to further display the development of the term deepfakes and the risks associated with them in recent years. Furthermore, this query aligns with the time period and methods used for the final steps in this research.

Now that we know the phenomenon of deplatforming deepfakes is on the rise, the next task was to find out what exactly is being talked about and what the key points are; in short, what the general audience thinks about deepfakes. Ideally, Reddit would be the best place to analyze this, since it is known as the place where the concept of deepfakes emerged. In a subreddit called r/deepfakes, thousands of deepfaked videos of celebrities surfaced and members shared their views on them. However, the subreddit was banned in 2018 over fears of its adverse use. Twitter is a platform increasingly known for its political nature; hence, a calculated assumption was made that Twitter would host a lot of the discourse around deepfakes and their risks. A simple search for the keyword [deepfakes] on Twitter surfaced thousands of tweets from the past few years, which allowed us to proceed with studying them. TCAT, a data extraction and analysis tool for Twitter, was employed for the tasks ahead. TCAT is built by the DMI and allows its users to capture tweets from a specific timeframe and run multiple types of analysis on them, including hashtag frequency, keyword frequency, and user frequency. During the close readings of the news articles on the risks posed by deepfakes, the issue of ‘privacy intrusion’ appeared frequently. Hence, the already available ‘privacy’ TCAT dataset by DMI was used to extract tweets containing the keywords [deepfake] and [deepfakes].

This was done for all five years of our study. Once we had all the tweets containing the keyword [deepfakes] from 2015 to 2019, we noticed that there were just two tweets for the years 2015, 2016, and 2017 combined. This happened because the dataset we used was already filtered on the ‘privacy’ keyword, and querying for deepfakes added a second filter, which limited the number of tweets about deepfakes (one of the limitations of the dataset used). However, thousands of tweets were extracted for the years 2018 and 2019. These two years were divided into four periods of six months each in order to see how the discourse evolves and whether the risks that are mentioned change over time.
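The division into four half-year periods can be sketched as follows. This is an illustrative example only: it assumes the tweets are available as hypothetical `(date, text)` pairs, which is not necessarily the shape of a TCAT export, and the function names `period_of` and `split_by_period` are invented for this sketch.

```python
from datetime import date


def period_of(day):
    """Map a date in 2018-2019 to period 1-4 (half-years); None otherwise."""
    if day.year not in (2018, 2019):
        return None
    half = 0 if day.month <= 6 else 1  # January-June -> 0, July-December -> 1
    return (day.year - 2018) * 2 + half + 1


def split_by_period(tweets):
    """Group (date, text) tweets into a dict keyed by period number."""
    periods = {1: [], 2: [], 3: [], 4: []}
    for day, text in tweets:
        period = period_of(day)
        if period is not None:
            periods[period].append(text)
    return periods


if __name__ == "__main__":
    # Hypothetical tweets, invented for illustration only.
    sample = [
        (date(2018, 3, 14), "first period tweet"),
        (date(2019, 9, 2), "fourth period tweet"),
        (date(2017, 12, 31), "outside the 2018-2019 window"),
    ]
    for number, texts in split_by_period(sample).items():
        print(number, texts)
```

Tweets dated outside 2018 and 2019 are dropped rather than assigned to a period, mirroring the decision to analyze only those two years.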

All these tweets were then turned into a large text corpus and loaded into AntConc. AntConc is a text analysis software developed by Dr. Laurence Anthony, a linguist and professor of Engineering & Science. Upon querying a keyword, the software scans through large corpora and shows the specific contexts in which the keyword was used. Here, in order to identify the risks of deepfakes, we queried the keyword [deepfakes] in such a manner that every tweet with context words like [risks], [challenges], [threats], [dangers] and other synonyms of the word ‘risks’ came up, thus showing us all the tweets in which people talk about the risks posed by deepfakes. We then did a close reading of all these tweets and examined whether the risks evolve or change as time progresses.
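The concordance step can be approximated with a small keyword-in-context routine. This is a minimal sketch and not AntConc's implementation: the tokenizer, the five-token window, the `RISK_WORDS` list, and the function name `concordance` are all assumptions made for illustration. It returns the left context, the keyword, and the right context for every keyword hit that has a risk-related word nearby.

```python
import re

# Assumed list of context words; the study also used other synonyms of "risks".
RISK_WORDS = {
    "risk", "risks", "challenge", "challenges",
    "threat", "threats", "danger", "dangers",
}


def concordance(tweets, keyword_pattern=r"deepfakes?", window=5,
                context_words=RISK_WORDS):
    """Return (left, keyword, right) for keyword hits that have a
    context word within `window` tokens on either side."""
    keyword = re.compile(keyword_pattern, flags=re.IGNORECASE)
    hits = []
    for tweet in tweets:
        # Simple tokenizer: keeps hashtags, mentions, and hyphenated words whole.
        tokens = re.findall(r"[\w'#@-]+", tweet)
        for i, token in enumerate(tokens):
            if not keyword.fullmatch(token.lstrip("#")):
                continue
            left = tokens[max(0, i - window):i]
            right = tokens[i + 1:i + 1 + window]
            nearby = {t.lower().lstrip("#") for t in left + right}
            if nearby & context_words:
                hits.append((" ".join(left), token, " ".join(right)))
    return hits


if __name__ == "__main__":
    # Hypothetical tweets, invented for illustration only.
    sample = [
        "Deepfakes are a growing threat to privacy",
        "I made a deepfake for fun",
        "The risks of #deepfakes are real",
    ]
    for left, word, right in concordance(sample):
        print(f"{left:>30} | {word} | {right}")
```

The output of such a routine is only a shortlist; the interpretation of the risks still depends on the close reading described above.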

Results & discussion

Figure 2: Frequency of the keywords deepfake and face swap on the top ten tech news websites from 2015-2019

According to figure 2, the frequency of the keyword [deepfake] on the ten selected tech news websites almost doubled in 2019 compared to 2018, due to the influx and virality of face-swapping apps. The main discourse involves the Chinese face-swapping application ZAO, which uses AI technology to let anyone effortlessly create highly realistic deepfakes from self-taken or downloaded images and videos. As the app went viral, it came under scrutiny for enabling misleading videos that blur the truth. In 2018, however, we already notice that the discourse around deepfake technology begins to develop, as the risks concerning the misuse and exploitation of the technology are being discussed. Prior to 2015, the word deepfake is barely mentioned by these news outlets, partly due to its origins on Reddit; we therefore decided to use the term face swap as a constant variable for comparison, as it predates the term deepfake. The term face swap itself was used much less often, as deepfake became the dominant term associated with the discourse over time. Overall, the discourse around the term deepfake is still evolving, and its frequency will continue to increase as facial recognition technology evolves and more actors or institutions are affected by it or try to regulate it.

Figure 3: Frequency of the discourse surrounding the banning of deepfakes from 2015-2019

Figure 3 represents the frequency of the term deepfake in association with the discourse of deplatforming on the same ten tech news websites as figure 2. Here we notice a sudden spike in 2018, which was when Pornhub started enforcing its policy of banning nonconsensual deepfaked videos on the platform and when Reddit banned the subreddit r/deepfakes, which included similar obscene content. In 2019, China passed its law that makes publishing deepfakes a criminal offense, on the grounds that deepfakes disrupt social order and create political risks that are seen as a threat to national security and social stability. The development of advanced AI technology and its accessibility on open source websites made platforms consider deplatforming deepfakes from 2018 onwards, and the discourse continues as firms are also developing tools to detect and moderate the use of deepfake technology.

Figure 4: Frequency of the discourse surrounding the risks of deepfakes from 2018-2019. Each period comprises half a year of data.

Figure 4 represents the frequency of the terms deepfake and risk among 73 news websites that were collected with the search engine scraper and manually filtered. Unlike figure 3, it only covers the years 2018 and 2019, which was when the discourse around deepfakes was at its peak. There was a massive spike in period four concerning the risk of deepfakes, as their use became much more prevalent in mainstream media; this also coincides with the release of the above-mentioned deepfake app ZAO. Period four is when new risks of deepfakes emerged from their use on social media platforms, whereas previous periods mainly addressed the risk of reputational harm on pornography sites and community platforms like Reddit. As a result, news outlets wrote more articles about deepfakes that address the social and political risks that emerged after deepfake technology became mainstream. Period four was also when major social media platforms like Twitter and Facebook announced their stance on combating and moderating the use of deepfakes by addressing the major risks associated with them.

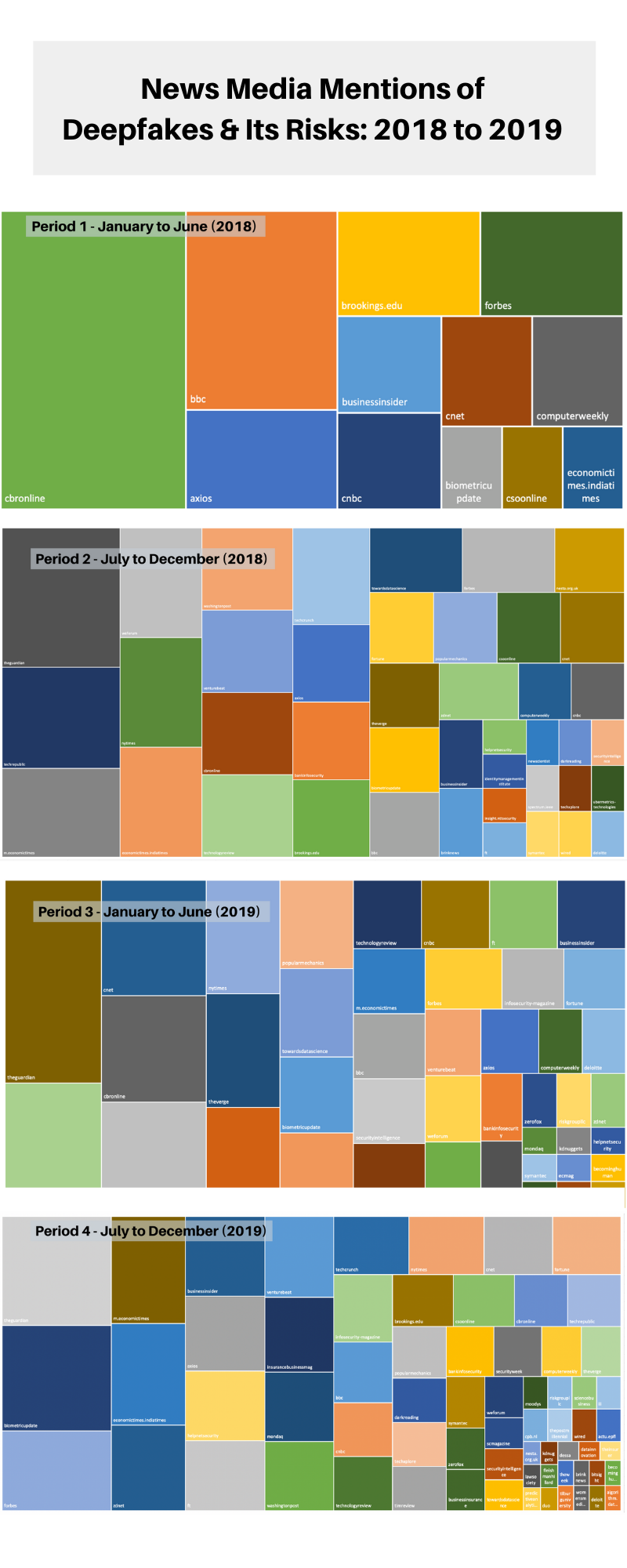

Figure 5: News media mentions of deepfake and its risk from 2018-2019

According to figure 5, throughout all four periods we notice how the rise of websites mentioning the risk of deepfakes is a testament to how the issue is gaining prominence. It can be noted that during period one there were only a few websites that covered the risks of deepfakes, as the majority of the sites were tech websites and the minority were general news websites. However, as the usage of deepfake became mainstream during the fourth period, there were more general news websites and other sites covering the issue in comparison to the amount of tech websites. With more websites covering the risks of deepfakes it also signifies how the different categories of risk have expanded and developed throughout each period in accordance with the context of tweets. As the usage of deepfakes became mainstream and the categories of risks expanded the discourse of moderating and deplatforming deepfakes also emerged in response to its political risks.

Concordance analysis of TCAT privacy dataset

Period 1: January-June 2018

In the first period, most tweets are concerned with the privacy aspect of deepfake videos, as well as the legal implications of deepfake videos circulating online. Most Twitter users seem to be concerned about what laws should be made in order to protect people’s privacy, while some others point out that, for example in the United States, laws are already in place to protect people from extortion or harassment through deepfake usage. In an article linked in one of the tweets, David Greene writes: “The US legal system has been dealing with the harm caused by photo-manipulation and false information in general for a long time, and the principles so developed should apply equally to deepfakes.” (Greene, 2018) Further, some other users argue that the rising popularity of deepfakes could be seen as undermining the surveillance state, as the legitimacy of photo and video evidence seems to become more contested: such evidence could perhaps soon be argued to be unreliable because deepfakes exist.

Period 2: July-December 2018

The tweets made between July and December 2018 are still largely concerned with the privacy intrusion aspect of the risks posed by deepfakes. A widely shared study by Nicola Henry, Associate Professor at the Royal Melbourne Institute of Technology, discusses how deepfakes are a growing tool used to sexually exploit, harass, and blackmail women. According to the tweets, the study found that deepfakes are becoming an invasion of female privacy and a violation of the right to dignity, sexual autonomy, and freedom of expression. The major takeaway here is that 2018 is not just the time when discussions surrounding the risks posed by deepfakes went mainstream; the risks also received a sizeable amount of attention from the academic field, the results of which became a talking point for users of Twitter. Among other implications of deepfakes, users discussed the looming threat they pose to the democratic functioning of society, specifically their use in political campaigns and how politicians and others could employ deepfakes to spread propaganda.

Period 3: January-June 2019

In this period, the majority of the tweets classify deepfakes as a risk in terms of artificial intelligence. This is mostly tied to the discourse of deepfakes emerging organically on surveillance cameras through AI camera technology, with ties to Huawei’s Moon Mode. The controversy around this mode is that it can artificially augment elements of a photo without fully disclosing what has been altered. There were also a few mentions of how deepfakes are not just a risk to personal privacy but also a risk to democracy and a vehicle for fraud. This has also been tied to the notion of trust and how deepfakes can complicate the landscape of factual and fake news. Multiple tweets also refer to a deepfake of Mark Zuckerberg and how it poses a challenge to the moderation and banning of deepfakes on social media platforms like Facebook. This ties into the main discourse of deplatforming deepfakes on social media platforms as part of the larger narrative on the risk of misinformation. Overall, the tweets of this period mainly link the risks of deepfakes to self-learning AI technology and to the capability of AI-enabled surveillance cameras to organically alter images.

Period 4: July-December 2019

This brings us to period 4, which covers the most recent developments in risk narratives surrounding deepfakes. Interestingly, this period showcases a wider variety of risk factors and a larger degree of urgency in tweets, for a variety of reasons. The release of the Chinese app ZAO and the face-swapping app FaceApp was at the centre of attention in many arguments, and it brought forth different issues with deepfake distribution.

One major issue that was touched upon was the topic of privacy, which is typically extended to identity fraud and copyright infringement of intellectual property, which is respectively turned into arguments of classifying deepfakes as fair use under. While the use of photos to develop deepfakes with apps has stirred up debate, the most notable topic of debate is related to the upcoming 2020 U.S. elections and the risk deepfakes pose to the dissemination of misinformation and general election interference that may come from the use of the technology. This holds especially true due to the existing concerns some individuals may already have after the 2016 U.S. elections, which dealt with Russian election interference already (Mueller). As such, the urgency and direct implications of deepfakes is the main focus in this period, also partly due to increased news presence and media attention the topic has seen.

Conclusion

In this report, we discuss the development of both the narratives surrounding the risks of deepfakes, as well as the process of deplatforming their use and distribution. Several platforms have opted to restrict the distribution of deepfakes; however, due to their inherent qualities the detection and enforcement of this deplatformisation is limited at best. By comparing the media presence of the topic and the variety of different sources to the overall tone and narrative found on Twitter, we establish the overall risk development of deepfakes and their position in the public sphere.

While the term ‘deepfake’ became widely used in 2017 and 2018, the concept has been around for longer, albeit often under different terms such as ‘face swap’, from which it is differentiated by the use of artificial intelligence. One can see that the narrative and risks associated with deepfakes have grown from the aspect of privacy intrusion and identity to a wider discussion concerning artificial intelligence software and deployment. Furthermore, as the technology has matured and garnered a larger presence in a wider variety of news sources over the last two years, we observe a larger scope of discussion, which connects the theoretical concerns and consequences of deepfake use to practical, real-life situations that could suffer from it, most notably the upcoming 2020 U.S. elections. However, it is noteworthy that previous concerns and risks associated with deepfakes have not disappeared; instead, they have evolved to be all-encompassing, and the discourse surrounding them has grown significantly in terms of the general understanding of different actors. We expect this trend to continue as the use of deepfakes becomes more widespread and media presence of the topic grows further.

One major limitation of this research is the trendiness of the discourse revolving around the use of deepfakes. The controversy and risk surrounding deepfakes are constantly evolving in relation to AI and facial recognition technology. The role of platforms in addressing and moderating deepfakes is also an upcoming concern, as Facebook announced in January 2020 that deepfakes will be joining its categories of banned content (Edelman). Twitter, as a political platform, is also on the verge of banning deepfakes that are “likely to cause harm” (Fisher). The trend of platforms taking action against deepfakes may be related to the upcoming 2020 US presidential elections, with platforms taking preliminary action to prevent political deepfake scandals that would spread misinformation and potentially impact the outcome of the election. Thus, it would be interesting to repeat this study within the next five years and take a comparative approach to define how the narrative has changed, by outlining new actors and identifying the evolution of risk networks. The important discourse will then be about how certain actors take action to dismiss certain categories of risks concerning the use of deepfakes that are still apparent today.
